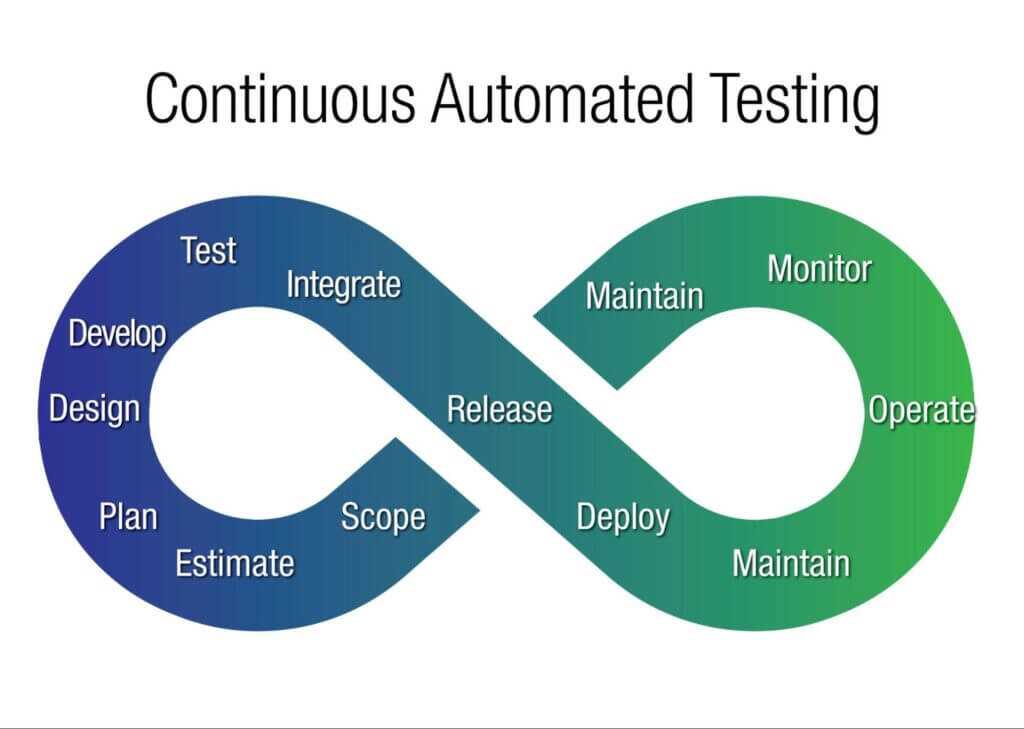

Optimizing Performance of Your Testing Team

Velmurugan Kothandapani & ATMECS Content Team

We live in a time when yesterday’s imagination has become today’s reality. Digital innovation, smart applications and machine intelligence are advancing at such a rapid pace that one may wonder: what happens between a technological innovation, its development and production, and the mass adoption of a new product? You may be surprised to learn that a tireless team of engineers performs rigorous tests throughout every technology product’s development and deployment cycle to ensure that innovations move from the lab to the market swiftly. They are the Quality Assurance (QA) team.

Leaders of QA teams face a number of challenges in implementing test automation “the right way” when innovation moves this fast. Here are a few we have experienced firsthand:

Asking the right questions – early!

The foundational principle of every testing team is to “Ask Better Questions” early in the Software Development Life Cycle (SDLC). Even a single flaw, if it is identified late in the process, can be costly to fix. Needless to say, failing to catch a defect and inadvertently allowing it into production can result in significant financial loss, damage to the company’s credibility and a loss of customer trust.

Effective use of Artificial Intelligence

The question is no longer whether to use AI but where AI should be deployed to get the most out of it. As computing power, advances in AI and debates about what machines and people can or should do grow every day, it is important to define the roles and responsibilities of AI and of people so that each performs at its best for the advancement of human society. This is where business and IT leaders need to ask whether freeing human testers from monotonous duties, so they can spend more time on exploratory testing, is in the best interest of the company’s IT organization. After all, “The Art of Questioning” is what distinguishes humans from machines.

Organizational Asynchronicity

From sales and marketing to R&D, and from development to testing, functional departments more often than not have their own KPIs and ways of working. This leads teams to work in silos, each following its own departmental SOPs. QA and testing, despite being the guardian of quality for every new product innovation, is often under-prioritized. The result is long test life cycles, delayed product development and a delayed time to market.

Challenges due to today’s global, digital world

While the growth of digital technologies has enabled every company to make its product or service ubiquitous through global reach, it has also created a few headaches for testing teams. Whether to deploy test environments in the cloud or on-premises, and how to handle the infrastructure demands of multiple customer touch points (platforms, devices, browsers), are questions that keep testing teams up at night. On top of that come scalability issues as the number of test modules and test suites grows.

Cumbersome Testing Framework Development

Developing a testing framework while onboarding an automation project is time-consuming, costly and resource-intensive. It requires nuanced programming skills and versatile developers throughout the framework development cycle.

Absence of the Right Tools

Given the many current and future challenges a business faces in the post-pandemic era, it is imperative for IT leaders to “empower” their testers by providing them with “best in class” tools and technologies. More often than not, the “Testing” function is likened to a “Black Box”.
This is because there is a lack of proper reporting solutions that provide visibility into test coverage and support executive intervention and decision-making.

Introducing ATMECS FALCON – A Test Automation Platform Testers and Team Leaders Love to Use

ATMECS engineers have studied the testing landscape in depth and developed an out-of-the-box, unified continuous testing platform that supports testing and quick automation of Web UI, Web Services, RESTful Services and Mobile, all in one elegant platform. Falcon, an AI-powered, intelligent and automated testing platform, has made testing and automation effective, efficient and enjoyable for testers and team leaders alike.

With parallel execution enabled for large test suite runs, and centralized reporting to monitor and analyze all project test results in an intuitive user interface, once-dreaded activities are now seamless, easy to complete and pleasurable for testers, both in-house and at our client deployments. Additionally, what used to take over a week to accomplish now takes less than 15 minutes with Falcon. With timely quality reporting, dashboards and alerts, Falcon helps keep key IT stakeholders informed and in control of their testing process while setting engineering teams up for successful completion and deployment. Because Falcon works seamlessly with cloud technologies, on demand and at scale, our clients have testified that with Falcon, quality is no longer a serial activity that follows engineering builds but a parallel activity that agile teams can depend on throughout the build cycle.

Sneak peek at Falcon – Highlights

- One tool for Web, Native Mobile Apps and Web Services (RESTful, SOAP)
- AI-powered Smart Locator Generator that generates locators automagically for the UI elements of both web and native mobile apps
- AI-powered self-healing test scripts that automatically fix and adjust to changes in the UI
- AI-powered PDF file comparison
- Test data support for XML, Excel, JSON and databases (relational and document-based)
- Built-in integration with Jira and a continuous integration tool (Jenkins)
- Built-in integration with SauceCloud and BrowserStack (cloud-based platforms for automated testing)
- AI integration for speed and accuracy
- A Lean version (without integration with the above tools) that includes all key features of the framework
- Supported browsers: IE, Chrome, Firefox, Opera and Safari; supported operating systems: Windows, Mac and Linux (thanks to the flexibility of Selenium)
- Integrated, centralized report dashboard for the leadership team
- Manual testers can also use the framework to automate tests with minimal training and without an in-depth understanding of the tool, the framework or programming

Contact us to learn more!